Overview

UrbanSyn is a diverse, compact, and photorealistic dataset of more than 7.5k annotated synthetic images. It was created to address the synth-to-real domain gap, contributing unprecedented synthetic-only baselines for domain adaptation (DA) methods.

Reduce the synth-to-real domain gap

The UrbanSyn dataset helps reduce the synth-to-real domain gap by contributing unprecedented synthetic-only baselines on which domain adaptation (DA) methods can build.

Open for research and commercial purposes

UrbanSyn may be used for research and commercial purposes. It is released publicly under the Creative Commons Attribution-ShareAlike 4.0 (CC BY-SA 4.0) license, which permits commercial use. For detailed information, please check our terms of use.

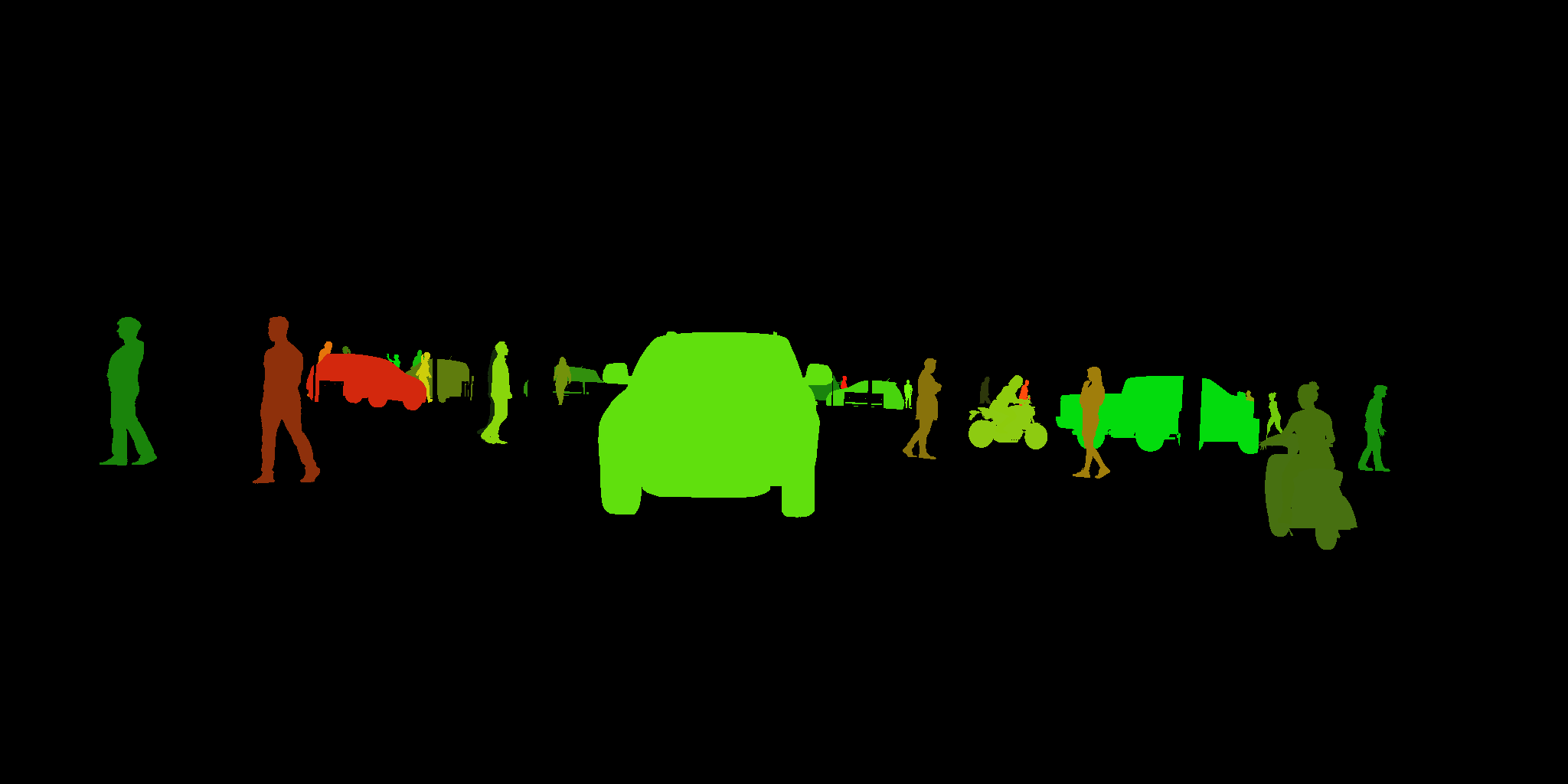

Ground-truth annotations

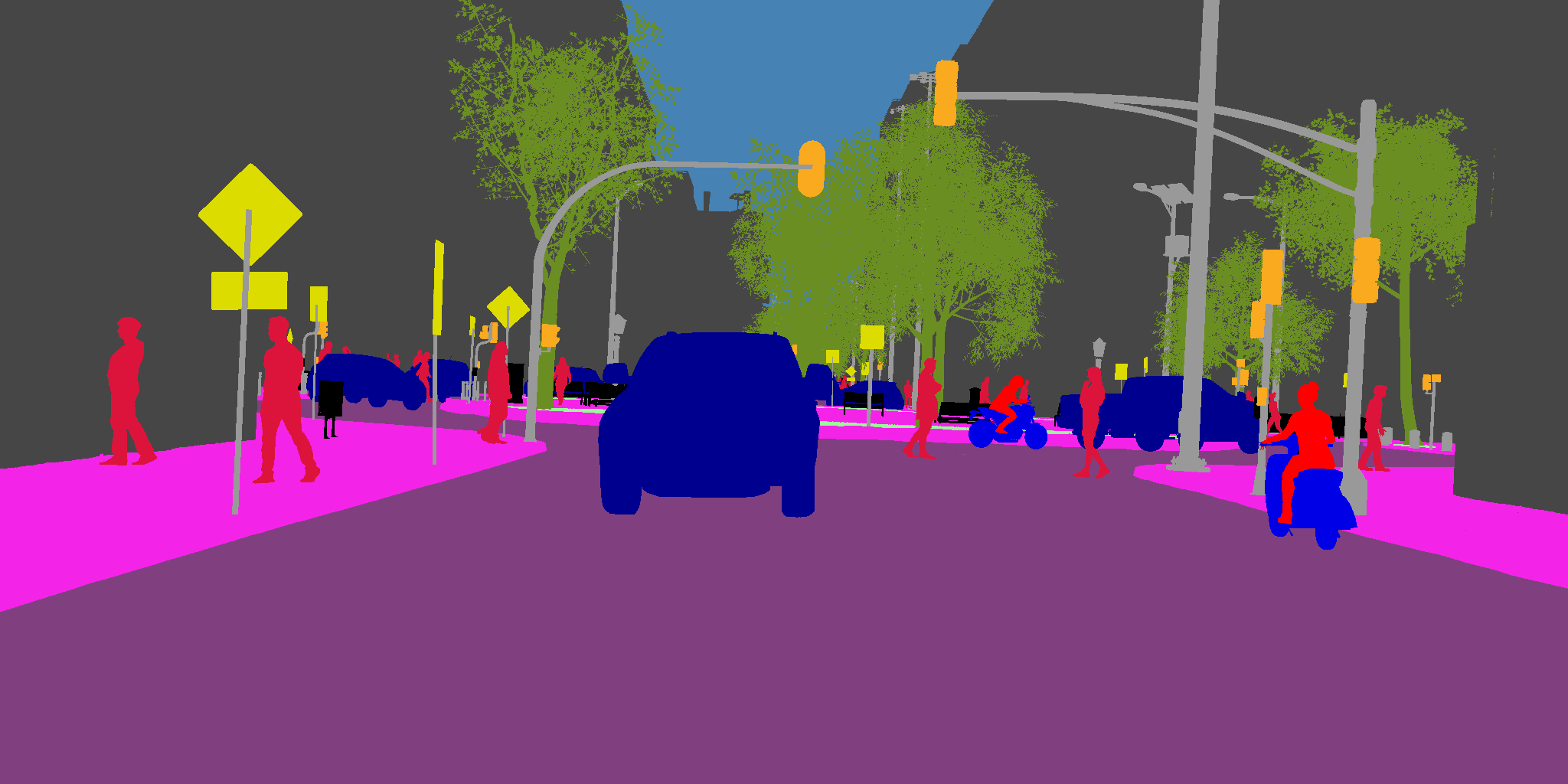

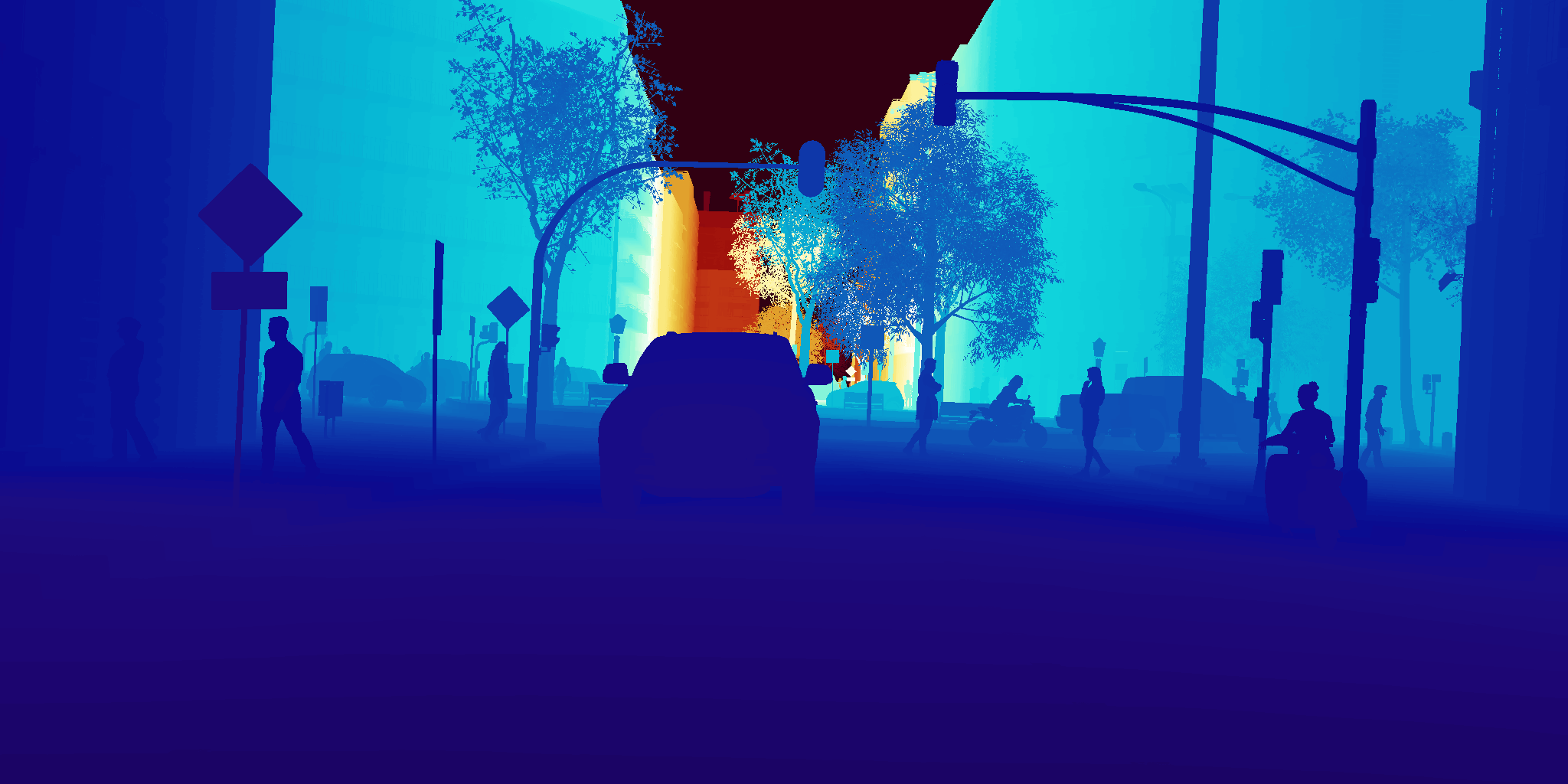

UrbanSyn comes with photorealistic color images, per-pixel semantic segmentation, depth, instance panoptic segmentation, and 2-D bounding boxes. For samples of the per-pixel ground truth, please check our examples of annotations.
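To illustrate how the per-pixel instance annotations relate to the 2-D bounding boxes, here is a minimal sketch that derives axis-aligned boxes from an instance-ID map. It makes several assumptions that this page does not confirm: that the instance annotation can be loaded as a 2-D integer array, that each object carries a unique ID, and that `0` marks background. The function name `boxes_from_instance_map` and the toy array are hypothetical, and the actual UrbanSyn file encoding may differ.

```python
import numpy as np


def boxes_from_instance_map(instance_map, background_id=0):
    """Return {instance_id: (x_min, y_min, x_max, y_max)} with inclusive pixel coordinates.

    Assumes one unique integer ID per object and a single background ID
    (both are illustrative assumptions, not the documented UrbanSyn encoding).
    """
    boxes = {}
    for instance_id in np.unique(instance_map):
        if instance_id == background_id:
            continue
        # Row (y) and column (x) indices of every pixel belonging to this instance.
        ys, xs = np.nonzero(instance_map == instance_id)
        boxes[int(instance_id)] = (
            int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())
        )
    return boxes


# Toy 4x6 instance map standing in for a real annotation image.
toy_map = np.array([
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 2],
    [0, 0, 0, 0, 0, 2],
    [0, 0, 0, 0, 0, 0],
])

boxes = boxes_from_instance_map(toy_map)
print(boxes)  # {1: (2, 0, 3, 1), 2: (5, 1, 5, 2)}
```

The boxes use inclusive pixel coordinates. Tools that expect `(x, y, width, height)` would need `width = x_max - x_min + 1` and `height = y_max - y_min + 1`.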

High degree of photorealism

UrbanSyn features highly realistic driving scenarios built from procedurally generated content and high-quality curated assets. To achieve this photorealism, we use industry-standard unbiased path tracing and AI-based denoising techniques.

Annotations (Ground-Truth)

UrbanSyn provides per-pixel ground-truth semantic segmentation, scene depth, instance panoptic segmentation, and 2-D bounding boxes. Check some of our examples: